By trade I am an electronics engineer. Although I have never worked in that field and never will, some of that education has stayed with me in my interests and still affects my life.

One of these things is computers and what one can do with them.

Back in 2016 I bought my first home server, a pre-built Dell PowerEdge T20 that still runs today. When I got my house in 2020, I added two more servers, as the Dell’s computing power was rather limited. I got myself two pre-built Asus machines from a company seizure, each sporting 4 TB of SAS drives, Adaptec RAID controllers, 32 GB of DDR4 ECC memory, dual Intel Xeon CPUs with 6 cores each and redundant power supplies.

Now, to everyone accusing me of overkill: yes, you are right, but I also paid only 80€ for each of these, so running things on a Raspberry Pi would have cost me more for less power.

Anyway, this February the first power supply blew, and given that they had all been running for about the same time, it was certain the others would soon follow. So I looked up replacements and had to sit down for a while: they aren’t made any more, and on the secondary market they go for at least 300€ each.

Thus I was faced with a choice: spend 1200€ on four power supplies to keep two machines that had cost me 160€ in total running, or invest in a new machine.

As you might have guessed from the title, I got a new one. And this time, I built it myself.

So what are we looking at:

Hardware:

CPU: Intel Core i5-12400

Cooler: Noctua NH-L9

RAM: 64GB DDR4-3200

Mainboard: ASRock Z690

Boot Drive: Samsung SSD 980 500GB

Data Drives: 4 Seagate IronWolf Pro 4TB

Power supply: be quiet! Systempower 9 600W

Case: Inter-Tech IPC 3U-3508

All of which cost me less than the power supplies for the old machines would have.

Software:

I installed Ubuntu 22.04 LTS on the machine, boot the OS from the SSD and configured the data drives as one large LVM volume.

Applications run in Docker containers, alongside three virtual machines: two running Windows 11 and one running Ubuntu with Pi-hole as a local DNS server and network-wide ad blocker.

The building experience:

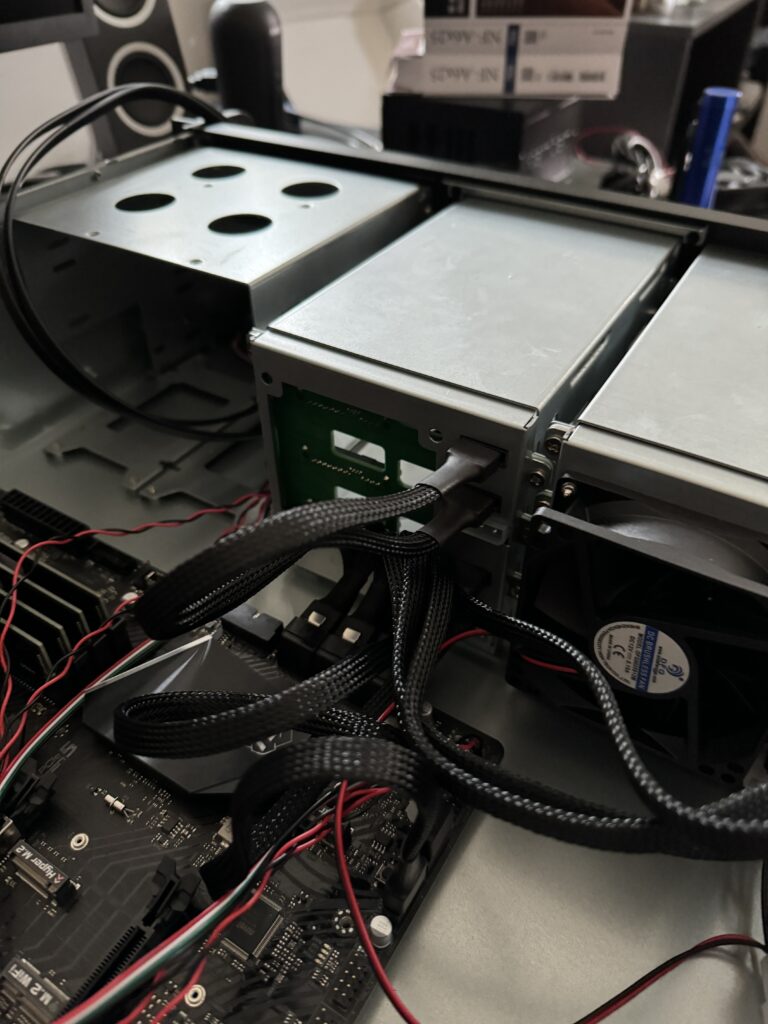

All in all I am happy with the case, but if you are planning on getting the same one, let me advise you to pick a different mainboard form factor. The specification states that it fits ATX mainboards, and it does, but the mainboard reaches beneath where the power supply sits, blocking access to any of the pins in that area. Also, the SATA ports sit exactly where the drive cage is installed, so I had to remove one of the fans to connect everything, all the while afraid of breaking the mainboard given how tight the fit was. See the picture above.

I also had to order additional SATA cables, as the mainboard came with only one despite having 8 ports, as well as two case fans, since those were missing too.

But after a couple of cuts in my fingers from the case’s sharp edges, figuring out that for some reason the integrated GPU did not output to the onboard HDMI port, and installing the old graphics card from my desktop PC, I got everything fitted and working.

How things are going:

By now the server has been running for a month without giving me any issues. So far I have moved Home Assistant, my surveillance DVR and Roundcube to it, as well as two Windows 11 machines and, as mentioned before, an Ubuntu server for Pi-hole.

So how does it perform?

In terms of threads this little box is equal to the two retired servers, and the same goes for RAM. Given that the per-core performance of this i5 is about double that of the old CPUs, it is currently bored with the tasks it handles. It is fast, runs cool and quiet, and all in all is a huge improvement over my prior setup.

But here is the biggest improvement: each of my two old rack servers drew around 200W from the wall. This one, doing the same tasks, draws 80W.

I can’t help but be impressed by how much performance per watt has improved in recent years, and the performance of chips in general.

I mean, think about it: a single consumer-grade i5 that is two generations old is now capable of carrying the same load as four enterprise chips from yesteryear at a fifth of the power.
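If you want to check those numbers yourself, here is a minimal back-of-the-envelope sketch in Python. It assumes both setups run 24/7 at a constant draw (the roughly 200W per old server and 80W for the new one measured at the wall) and uses a hypothetical electricity price of 0.30€ per kWh, which is an assumption and not from my bill, so plug in your own tariff.

```python
# Wall-power figures from the post: two old servers at ~200 W each,
# the new build at ~80 W doing the same work.
OLD_SERVER_WATTS = 200
OLD_SERVER_COUNT = 2
NEW_SERVER_WATTS = 80

# ASSUMPTION: hypothetical electricity price, replace with your own tariff.
PRICE_EUR_PER_KWH = 0.30

# ASSUMPTION: constant load around the clock; leap years ignored.
HOURS_PER_YEAR = 24 * 365  # 8760 h


def yearly_kwh(watts: float) -> float:
    """Energy used by a constant load running 24/7 for one year, in kWh."""
    return watts * HOURS_PER_YEAR / 1000


old_watts = OLD_SERVER_WATTS * OLD_SERVER_COUNT
ratio = NEW_SERVER_WATTS / old_watts  # fraction of the old power draw
saved_kwh = yearly_kwh(old_watts) - yearly_kwh(NEW_SERVER_WATTS)
saved_eur = saved_kwh * PRICE_EUR_PER_KWH

print(f"old setup: {old_watts} W, new setup: {NEW_SERVER_WATTS} W")
print(f"new/old power ratio: {ratio:.2f}")
print(f"energy saved per year: {saved_kwh:.1f} kWh")
print(f"cost saved per year: {saved_eur:.2f} EUR (at the assumed price)")
```

With these inputs it reports a ratio of 0.20, which is the one fifth mentioned above, and about 2803 kWh saved per year, assuming the machines never idle down.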

I didn’t plan on putting a dedicated GPU into this machine, but as it turns out, I can use the little Radeon card for object detection on my DVR and for transcoding video streams from my Jellyfin server.

All in all I would say that my old hardware dying on me was a good thing, as this machine performs far better than I imagined at a power draw that is almost negligible.

Also, now that I am running everything on Noctua fans, you’d be hard pressed to hear it at all, while the old machines made it almost impossible to stay in the same room for long.

Conclusion:

First up, always make proper backups. A power supply blew on me, and while the machine itself kept working because it had two, this could have been a huge loss of data. Offline backups are worth it, trust me.

Never trust hardware RAID. I tried connecting the drives to a second RAID controller of the same make but with different firmware, and it couldn’t read the drives.

You don’t have to spend a fortune on a home server; modern consumer hardware is more than capable of handling your homelab load.

Also: computers, free software, smart homes and self-hosted solutions are rather cheap once you compare the running costs of cloud solutions with a one-time hardware investment plus the energy costs of modern hardware. Give it a try, you won’t be disappointed.